In this post I'll show you how I collect my solar production data from the SolarEdge API and send it to Log Analytics. Notice I said collect and not monitor. While the SolarEdge API is actually fairly well documented, it is limited to data at 15-minute intervals. In the SolarEdge app and website I can see the current output from each inverter in the system, but the API does not give you such granular information. Another issue I've seen is that the Zigbee module that sends your data from your location to SolarEdge sometimes lags behind real time by hours. In addition, while the system produces logs, I am not worthy to view said logs: with SolarEdge, your installer controls everything. I have asked my installer to grant me access to the logs, but they have not obliged.

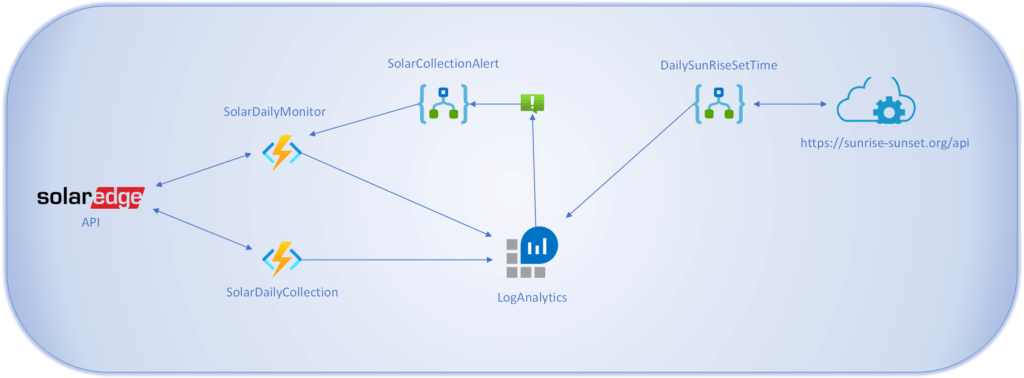

My setup for collecting the data is a pair of PowerShell Azure Functions that send it to Log Analytics. You don't have to use Log Analytics; once you have the data you can send it wherever you like, even Cosmos DB.

Requirements

- SolarEdge API Key

- SolarEdge Site Number

- 2 PowerShell Core Azure Functions

- Log Analytics Workspace

- Log Analytics workspace ID and key

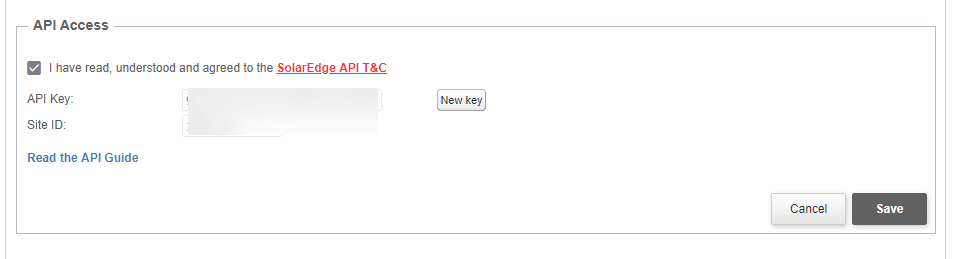

Create your SolarEdge API Key

Sign in to your SolarEdge account at https://monitoring.solaredge.com/, go to Admin -> Site Access, create your API key, and copy the Site ID and API Key.
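Before wiring anything up, it's worth checking that the new key works. Here's a minimal sketch, assuming the same URL shape the functions below use (site ID in the path, key in the api_key query string). Get-SolarEdgeUri is a hypothetical helper, not part of any SolarEdge module.

```powershell
# Hypothetical helper: builds a SolarEdge monitoring API URL for one site.
# Endpoint names such as overview, details and inventory are the ones used in this post.
function Get-SolarEdgeUri {
    param(
        [Parameter(Mandatory)][string]$Site,
        [Parameter(Mandatory)][string]$ApiKey,
        [Parameter(Mandatory)][string]$Endpoint
    )
    # Escape the key so unexpected characters can't break the query string
    $key = [uri]::EscapeDataString($ApiKey)
    # ${Endpoint} is braced because '?' may otherwise be parsed as part of the variable name
    "https://monitoringapi.solaredge.com/site/$Site/${Endpoint}?api_key=$key"
}

# Quick check from your own machine (replace the placeholders with your values):
# Invoke-RestMethod -Uri (Get-SolarEdgeUri -Site '<site id>' -ApiKey '<api key>' -Endpoint 'details')
```

If the key and site ID are right, the details call returns your site's details object; a bad key returns an error instead.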

Azure Functions

For my data collection, I have two Azure Functions. One runs once per day at 10 PM to collect the total of that day's production. The other runs every 15 minutes. However, I did not want it running all night collecting 0's, so I have it dynamically turned on at sunrise and off at sunset. I did that by creating a custom log with the sunrise and sunset times for my location, and then creating an alert that fires a Logic App, which turns the function on and off at sunrise and sunset.
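If you'd rather not build the alert and Logic App plumbing, a simpler but different approach is to let the 15-minute function keep its schedule and exit early when it's dark. This is not what I do in this post; it's a sketch, assuming you already have sunrise and sunset times in the same time zone as the clock you compare against (Azure Functions hosts generally report UTC). Test-IsDaylight is a hypothetical helper. The trade-off is that the function still executes overnight; it just does no work.

```powershell
# Hypothetical guard: returns $true when $Now falls between sunrise and sunset.
# An alternative to the alert + Logic App approach, not the method used in this post.
function Test-IsDaylight {
    param(
        [Parameter(Mandatory)][datetime]$Sunrise,
        [Parameter(Mandatory)][datetime]$Sunset,
        [datetime]$Now = (Get-Date)
    )
    # Inclusive at sunrise, exclusive at sunset
    ($Now -ge $Sunrise) -and ($Now -lt $Sunset)
}

# Example at the top of run.ps1 (the sunrise/sunset values here are made up):
# $rise = [datetime]::new(2020, 6, 1, 11, 15, 0)
# $set  = [datetime]::new(2020, 6, 2, 1, 30, 0)
# if (-not (Test-IsDaylight -Sunrise $rise -Sunset $set)) { return }
```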

In both Function Apps I have set up application settings named apikey and site for the SolarEdge information, and ID and key for Log Analytics.

Inside the functions we'll use $env:apikey, $env:site, $env:ID, and $env:key to get this information in PowerShell.
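Application settings show up as environment variables inside the function, so a missing or misspelled setting comes back as $null, which silently turns into an empty segment when it's concatenated into the URIs. A small guard makes that failure obvious. Get-RequiredSetting is a hypothetical helper; the setting names in the usage comments are the ones from this post.

```powershell
# Hypothetical helper: read an app setting (exposed to PowerShell as an
# environment variable) and stop with a clear error if it is missing.
function Get-RequiredSetting {
    param([Parameter(Mandatory)][string]$Name)
    $value = [Environment]::GetEnvironmentVariable($Name)
    if ([string]::IsNullOrWhiteSpace($value)) {
        throw "App setting '$Name' is not configured."
    }
    $value
}

# Usage in run.ps1 (setting names from this post):
# $apiKey      = Get-RequiredSetting 'apikey'
# $site        = Get-RequiredSetting 'site'
# $workspaceId = Get-RequiredSetting 'ID'
# $sharedKey   = Get-RequiredSetting 'key'
```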

Post to Log Analytics

I'll use the same PowerShell Core code from my Weather Collection Function App:

```powershell
# Build the SharedKey authorization header for the Log Analytics HTTP Data Collector API
Function Build-Signature ($customerId, $sharedKey, $date, $contentLength, $method, $contentType, $resource)
{
    $xHeaders = "x-ms-date:" + $date
    $stringToHash = $method + "`n" + $contentLength + "`n" + $contentType + "`n" + $xHeaders + "`n" + $resource

    # Sign the string with HMAC-SHA256 using the base64-decoded workspace key
    $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)
    $keyBytes = [Convert]::FromBase64String($sharedKey)
    $sha256 = New-Object System.Security.Cryptography.HMACSHA256
    $sha256.Key = $keyBytes
    $calculatedHash = $sha256.ComputeHash($bytesToHash)
    $encodedHash = [Convert]::ToBase64String($calculatedHash)
    $authorization = 'SharedKey {0}:{1}' -f $customerId, $encodedHash
    return $authorization
}

# Create the function to create and post the request
Function Post-LogAnalyticsData($customerId, $sharedKey, $body, $logType)
{
    $method = "POST"
    $contentType = "application/json"
    $resource = "/api/logs"
    $rfc1123date = [DateTime]::UtcNow.ToString("r")
    # $body is a UTF-8 byte array, so Length is the byte count the signature needs
    $contentLength = $body.Length
    # (Build-Signature has no -fileName parameter, so it is not passed here)
    $signature = Build-Signature `
        -customerId $customerId `
        -sharedKey $sharedKey `
        -date $rfc1123date `
        -contentLength $contentLength `
        -method $method `
        -contentType $contentType `
        -resource $resource
    $uri = "https://" + $customerId + ".ods.opinsights.azure.com" + $resource + "?api-version=2016-04-01"
    $headers = @{
        "Authorization" = $signature;
        "Log-Type" = $logType;
        "x-ms-date" = $rfc1123date;
        # $TimeStampField comes from the calling script
        "time-generated-field" = $TimeStampField;
    }
    $response = Invoke-WebRequest -Uri $uri -Method $method -ContentType $contentType -Headers $headers -Body $body -UseBasicParsing
    return $response.StatusCode
}

# Submit the data to the API endpoint
Post-LogAnalyticsData -customerId $customerId -sharedKey $sharedKey -body ([System.Text.Encoding]::UTF8.GetBytes($solar)) -logType $logType
```
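The call above converts $solar to a UTF-8 byte array before posting, and that matters: Build-Signature signs the content length, and the API expects it to be the number of bytes in the body. For plain ASCII JSON the character count and the byte count are the same, but any non-ASCII character (an accented site or inverter name, for example) makes them differ, and a signature built from the character count would be expected to fail authentication. This quick comparison shows the difference:

```powershell
# Compare a string's character count with its UTF-8 byte count.
# [char]0x00E9 is 'é', written this way so the script file's encoding doesn't matter.
$json = '{"name":"Caf' + [char]0x00E9 + '"}'
$bytes = [System.Text.Encoding]::UTF8.GetBytes($json)

"Characters: $($json.Length)"   # 15
"UTF-8 bytes: $($bytes.Length)" # 16, because 'é' takes two bytes in UTF-8
```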

Daytime Collection

The purpose of this function is to collect "real time" data during the day. As mentioned above, the API is limited to 15-minute intervals, so that's what this function is set to.

```powershell
# Workspace ID from the ID app setting
$CustomerId = $env:ID
# Primary key from the key app setting
$SharedKey = $env:key
# Specify the name of the record type that you'll be creating
$LogType = "SolarMonitor"
# time-generated-field expects the NAME of a field in the payload, not a date value.
# Leaving it empty makes Log Analytics use the ingestion time for TimeGenerated.
$TimeStampField = ""

$powerURI = "https://monitoringapi.solaredge.com/site/" + $env:site + "/overview?api_key=" + $env:apikey
$statusURI = "https://monitoringapi.solaredge.com/site/" + $env:site + "/details?api_key=" + $env:apikey
$invURI = "https://monitoringapi.solaredge.com/site/" + $env:site + "/inventory?api_key=" + $env:apikey

$power = Invoke-RestMethod -Uri $powerURI
$status = Invoke-RestMethod -Uri $statusURI
$inv = Invoke-RestMethod -Uri $invURI

# Build JSON output, grabbing inventory data, connected optimizers and status
$solar = $status.details | Select-Object Status, peakpower, lastupdatetime
$westarray = $inv.inventory.inverters | Where-Object {$_.name -eq "Inverter 2"}
$eastarray = $inv.inventory.inverters | Where-Object {$_.name -eq "Inverter 1"}

# Build final JSON adding current power, total power for the day and inventory data to $solar
$solar | Add-Member -Name WestInverter -Value $westarray.name -MemberType NoteProperty
$solar | Add-Member -Name WestCPUVersion -Value $westarray.cpuVersion -MemberType NoteProperty
$solar | Add-Member -Name WestPanelCount -Value $westarray.connectedOptimizers -MemberType NoteProperty
$solar | Add-Member -Name EastInverter -Value $eastarray.name -MemberType NoteProperty
$solar | Add-Member -Name EastCPUVersion -Value $eastarray.cpuVersion -MemberType NoteProperty
$solar | Add-Member -Name EastPanelCount -Value $eastarray.connectedOptimizers -MemberType NoteProperty
$solar | Add-Member -Name CurrentOutput -Value $power.overview.currentPower.power -MemberType NoteProperty
$solar | Add-Member -Name TodaysOutput -Value $power.overview.lastDayData.energy -MemberType NoteProperty

# Convert to JSON for the Log Analytics payload
$solar = ConvertTo-Json $solar
```

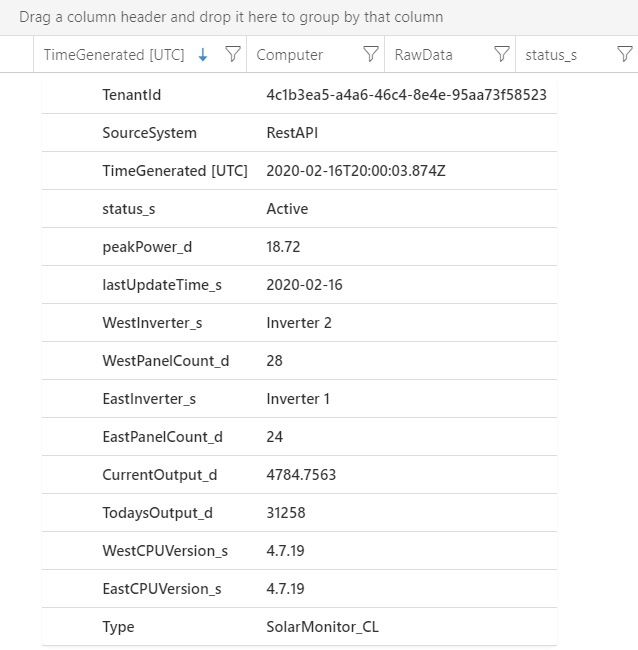

This section is where I collect all the solar production and system inventory data. There are three different URIs to collect it from. I then filter down to only the data I want and add it all to the $solar object.
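The chain of Add-Member calls works, but the same record can be built in one step with a [pscustomobject] literal, which keeps the property order and is easier to read. Here's a sketch of that alternative, assuming the same inverter naming (Inverter 2 on the west array, Inverter 1 on the east), the same response shapes used above, and the API's camelCase property names for the details fields. New-SolarRecord is a hypothetical name. If you switch, keep the property names identical to what you already send, or Log Analytics will start new columns for them.

```powershell
# Hypothetical refactor of the Add-Member chain into a single object literal.
# $Details   = $status.details, $Inverters = $inv.inventory.inverters, $Overview = $power.overview
function New-SolarRecord {
    param($Details, $Inverters, $Overview)
    # Same name-to-array mapping as the original code
    $west = $Inverters | Where-Object name -eq 'Inverter 2'
    $east = $Inverters | Where-Object name -eq 'Inverter 1'
    # A [pscustomobject] hashtable literal keeps properties in the order written
    [pscustomobject]@{
        status         = $Details.status
        peakPower      = $Details.peakPower
        lastUpdateTime = $Details.lastUpdateTime
        WestInverter   = $west.name
        WestCPUVersion = $west.cpuVersion
        WestPanelCount = $west.connectedOptimizers
        EastInverter   = $east.name
        EastCPUVersion = $east.cpuVersion
        EastPanelCount = $east.connectedOptimizers
        CurrentOutput  = $Overview.currentPower.power
        TodaysOutput   = $Overview.lastDayData.energy
    }
}
```

In the function, that becomes $solar = New-SolarRecord -Details $status.details -Inverters $inv.inventory.inverters -Overview $power.overview | ConvertTo-Json.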

This function is set up to run every 15 minutes using this schedule: `0 */15 * * * *`

And as mentioned, it gets turned off and on by an Azure Monitor alert via a Logic App, because I did not want to collect 0's all night.
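Running only during daylight also helps with the API's request quota. At the time of writing, SolarEdge's API documentation lists a limit of 300 requests per day per site (check the current docs, as limits can change). The daytime function makes three calls per run (overview, details, inventory), so a rough count is worth doing. Get-DailyApiCalls below is a hypothetical helper that counts timer fire times inside a window:

```powershell
# Hypothetical helper: rough count of API calls per day for a timer that fires
# every $IntervalMinutes (aligned to midnight) between $Start and $End.
function Get-DailyApiCalls {
    param(
        [Parameter(Mandatory)][timespan]$Start,
        [Parameter(Mandatory)][timespan]$End,
        [ValidateRange(1, 1440)][int]$IntervalMinutes = 15,
        [int]$CallsPerRun = 3   # overview, details, inventory
    )
    $step = [timespan]::new(0, $IntervalMinutes, 0)
    $day  = [timespan]::new(1, 0, 0, 0)
    $runs = 0
    # Walk the clock from midnight, counting fire times inside [Start, End)
    for ($t = [timespan]::Zero; $t -lt $day; $t += $step) {
        if ($t -ge $Start -and $t -lt $End) { $runs++ }
    }
    $runs * $CallsPerRun
}
```

Run all day, three calls every 15 minutes come to 288 requests, which together with the nightly function's single call leaves very little headroom under a 300-request cap; a 6 AM to 8 PM window comes to 168.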

Daily Total Production Collection

The purpose of this function is to collect the total production every day. The other problem with the SolarEdge API is that its collection can sometimes lag hours behind what's live. Did I mention this isn't a true monitoring solution? So this function runs every night at 10 PM local time and sends the day's total production to my workspace. It is also completely separate from the function above, since that one is turned off at night.

```powershell
$uri = "https://monitoringapi.solaredge.com/site/" + $env:site + "/overview?api_key=" + $env:apikey
$coll = Invoke-RestMethod -Uri $uri

# Build a record with the last update time and the day's total energy (Wh)
$solar = New-Object -TypeName psobject
$solar | Add-Member -Name Date -Value $coll.overview.lastUpdateTime -MemberType NoteProperty
$solar | Add-Member -Name Wh -Value $coll.overview.lastDayData.energy -MemberType NoteProperty
$solar = ConvertTo-Json $solar
```
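If you plan to chart the daily totals, it can be handy to store kWh alongside the raw Wh value. This is a hypothetical variant of the record above, assuming the same overview response fields; it keeps Date and Wh unchanged, so the existing SolarDaily columns stay the same and only one new column is added. New-SolarDailyRecord is an illustrative name.

```powershell
# Hypothetical variant of the daily record that also carries kWh.
# $Overview is $coll.overview from the code above.
function New-SolarDailyRecord {
    param([Parameter(Mandatory)]$Overview)
    $wh = [double]$Overview.lastDayData.energy
    [pscustomobject]@{
        Date = $Overview.lastUpdateTime
        Wh   = $wh
        kWh  = [math]::Round($wh / 1000, 2)   # rounded to two decimals for display
    }
}

# $solar = New-SolarDailyRecord -Overview $coll.overview | ConvertTo-Json
```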

To collect only once per day, I set the schedule to this:

`0 0 3 * * *`

This runs it once per day at 3 AM UTC, which is 10 PM my time.
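Timer schedules in Azure Functions are evaluated in UTC by default, so a fixed 3 AM UTC schedule lands at a different local time when daylight saving time changes (for US Central time, for example, 10 PM in summer but 9 PM in winter). Azure Functions also supports a WEBSITE_TIME_ZONE app setting on some hosting plans if you'd rather schedule in local time. To double-check what a UTC schedule means locally, here's a small sketch; ConvertTo-LocalScheduleTime is a hypothetical helper, and the fixed UTC-5 zone in the example is an assumption with no daylight saving rules. Swap in a real zone from [System.TimeZoneInfo]::FindSystemTimeZoneById to see DST effects.

```powershell
# Hypothetical helper: convert a UTC schedule time to local time in a given zone.
function ConvertTo-LocalScheduleTime {
    param(
        [Parameter(Mandatory)][datetime]$UtcTime,
        [Parameter(Mandatory)][System.TimeZoneInfo]$TimeZone
    )
    # Mark the value as UTC so the conversion doesn't treat it as machine-local time
    $utc = [datetime]::SpecifyKind($UtcTime, [System.DateTimeKind]::Utc)
    [System.TimeZoneInfo]::ConvertTimeFromUtc($utc, $TimeZone)
}

# Example with a fixed UTC-5 offset (no daylight saving rules)
$utcMinus5 = [System.TimeZoneInfo]::CreateCustomTimeZone('UTC-05', [timespan]::new(-5, 0, 0), 'UTC-05', 'UTC-05')
ConvertTo-LocalScheduleTime -UtcTime ([datetime]::new(2020, 6, 2, 3, 0, 0)) -TimeZone $utcMinus5
```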

Log Analytics

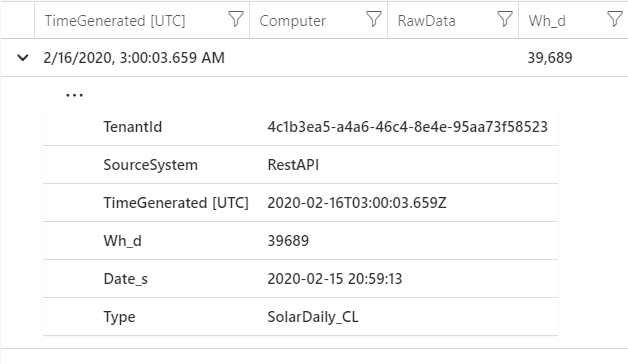

Once logs start flowing they’ll look like this for the SolarMonitor log

and this for the SolarDaily log.

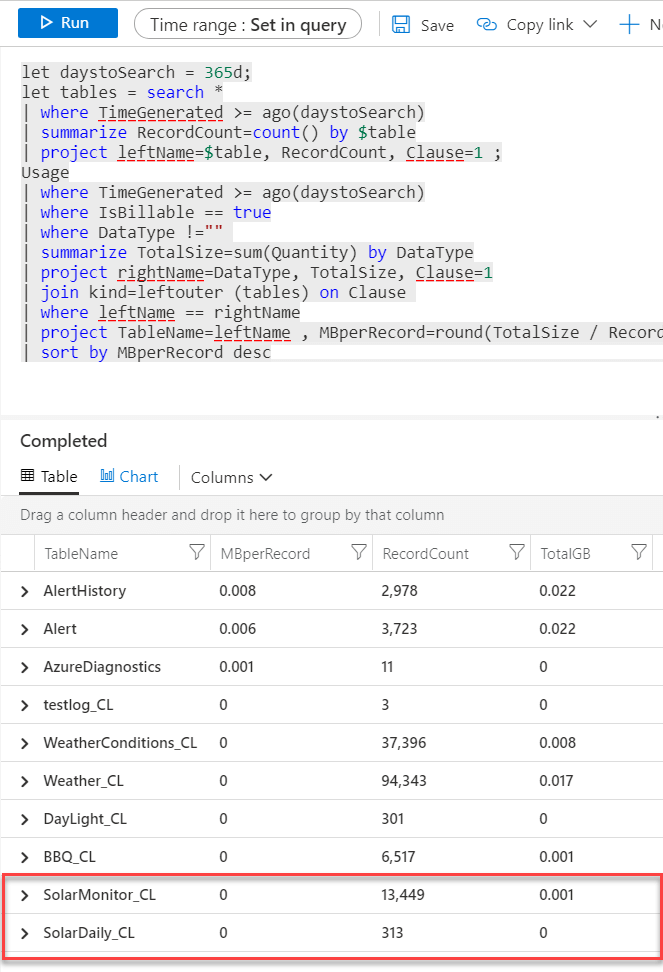

Of course, what's the log ingestion cost? Pretty much nothing.
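To put a rough number on "pretty much nothing", you can estimate the monthly volume from the payload size and the run count. This is a back-of-the-envelope sketch: the 500-byte and 100-byte payloads and the 56 daytime runs are assumptions, not measurements (you can measure a real payload with ([System.Text.Encoding]::UTF8.GetBytes($solar)).Length), and Log Analytics calculates billable size its own way, so treat the result as an order of magnitude. Get-MonthlyIngestionMB is a hypothetical helper, and MB here means 1,048,576 bytes.

```powershell
# Hypothetical helper: estimated monthly ingestion in MB (1 MB = 1,048,576 bytes).
function Get-MonthlyIngestionMB {
    param(
        [Parameter(Mandatory)][int]$PayloadBytes,
        [Parameter(Mandatory)][int]$RunsPerDay,
        [int]$Days = 31
    )
    [math]::Round([double]($PayloadBytes * $RunsPerDay * $Days) / 1MB, 3)
}

# Assumed sizes: ~500-byte SolarMonitor records 56 times a day,
# plus one ~100-byte SolarDaily record per day
$monitorMB = Get-MonthlyIngestionMB -PayloadBytes 500 -RunsPerDay 56
$dailyMB   = Get-MonthlyIngestionMB -PayloadBytes 100 -RunsPerDay 1
"SolarMonitor: $monitorMB MB, SolarDaily: $dailyMB MB"
```

Under those assumptions both logs together come to well under a megabyte a month.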

Summary

In summary, this is the full architecture for how I collect my solar production data from SolarEdge and send it to Log Analytics. In total there are three custom logs: one for sunrise/sunset times, which also provides the bonus information of daylight hours for that day; one for the daily total production; and one for production in 15-minute intervals during the day. In future posts I'll show how to visualize this data in Azure Monitor Workbooks. You can find all the code for this project on my GitHub here.

Related Blog Posts:

Grab Sunrise time from your GPS Location. https://www.systemcenterautomation.com/2019/05/custom-log-analytics-logs-logicappsps/

Use LogicApp to turn off and on Azure Function from Azure Monitor Alert. https://www.systemcenterautomation.com/2020/02/azure-monitor-alert-logicapp/

Set Managed Identity for LogicApp on Azure Functions. https://www.systemcenterautomation.com/2019/04/managed-identity-logic-app/